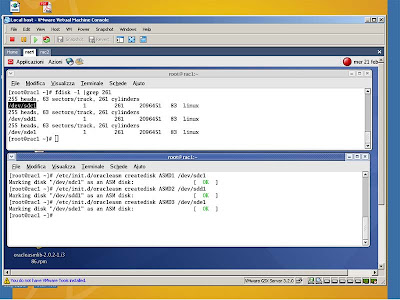

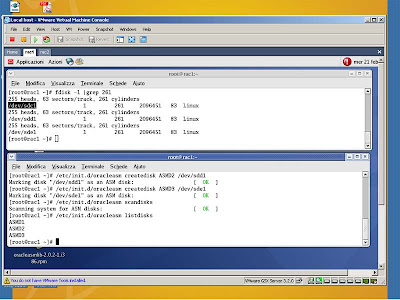

Start your first node and, as the root user, run:

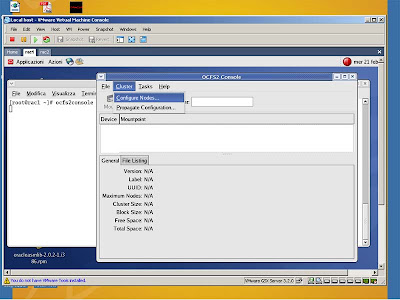

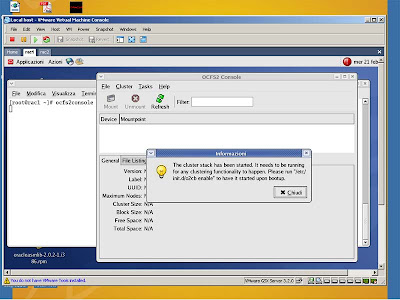

ocfs2console

ocfs2console is a GUI front-end for managing OCFS2 volumes.

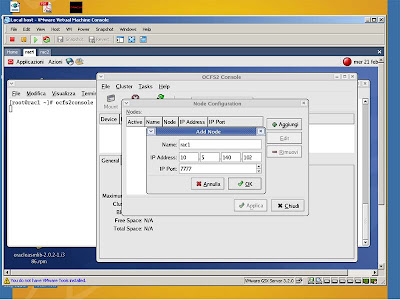

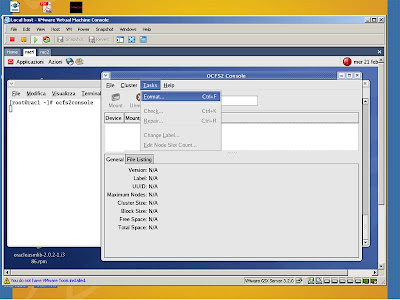

Select Cluster->Configure Nodes... from the menu, as shown in the picture.

Select Close on the next pop-up window, as shown in the picture.

Select Add and type the name and IP address of your first node, as shown in the picture.

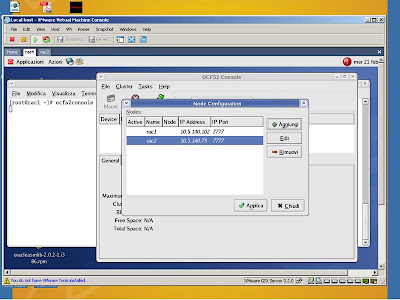

Select Add again and enter the name and IP address of your second node. Your final configuration should look like the one in the picture.
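The nodes entered in this dialog end up in /etc/ocfs2/cluster.conf. As a rough aid for checking that file later, here is a minimal shell sketch that prints a two-node cluster.conf in the stanza layout OCFS2 uses. The helper name print_cluster_conf, the IP addresses and the port 7777 (the usual default) are assumptions for illustration; the node names should match the host names of your nodes (rac1 and rac2 in this guide).

```shell
#!/bin/sh
# Sketch: print an OCFS2 cluster.conf for the given nodes.
# Arguments: cluster name, then one name=ip pair per node.
# Port 7777 and all addresses used below are hypothetical placeholders.
print_cluster_conf() {
    cluster=$1
    shift
    count=0
    for entry in "$@"; do
        name=${entry%%=*}   # text before the first "="
        ip=${entry#*=}      # text after the first "="
        printf 'node:\n\tip_port = 7777\n\tip_address = %s\n\tnumber = %d\n\tname = %s\n\tcluster = %s\n\n' \
            "$ip" "$count" "$name" "$cluster"
        count=$((count + 1))
    done
    # The cluster stanza records how many node stanzas precede it
    printf 'cluster:\n\tnode_count = %d\n\tname = %s\n' "$count" "$cluster"
}

# Hypothetical usage; compare the output with /etc/ocfs2/cluster.conf:
# print_cluster_conf ocfs2 rac1=192.168.1.101 rac2=192.168.1.102
```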

Start your second node and wait for the welcome screen.

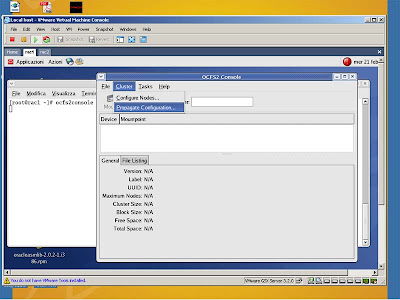

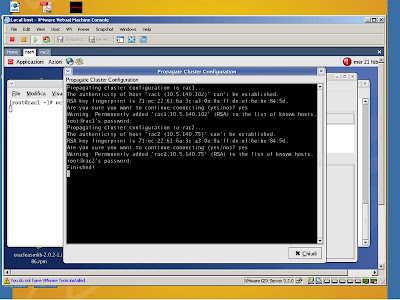

From your first node, select Cluster->Propagate Configuration... from the menu to copy the file /etc/ocfs2/cluster.conf from your first node to the second node.

Before you run the propagation command, you can verify on your second node that the /etc/ocfs2/cluster.conf file doesn't exist yet. The propagation command uses ssh with your root account to log in to the other node, so you have to enter the root password to establish the secure copy from rac1 to rac2, as shown in the picture. When you see Finished! you can close that terminal window.
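To double-check the propagation, you can compare the two copies of the file. This is a minimal sketch assuming root ssh access from rac1 to rac2 as described above; same_conf is a hypothetical helper that simply wraps cmp.

```shell
#!/bin/sh
# Sketch: report whether two copies of cluster.conf are byte-for-byte identical.
# Returns non-zero (and prints "different") if they differ or one is missing.
same_conf() {
    if cmp -s "$1" "$2"; then
        echo "identical"
    else
        echo "different"
        return 1
    fi
}

# Hypothetical usage from rac1:
# ssh root@rac2 cat /etc/ocfs2/cluster.conf > /tmp/cluster.conf.rac2
# same_conf /etc/ocfs2/cluster.conf /tmp/cluster.conf.rac2
```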

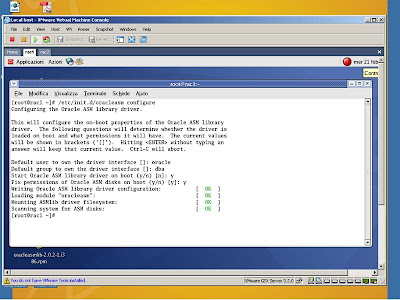

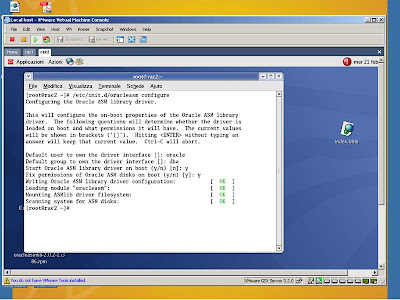

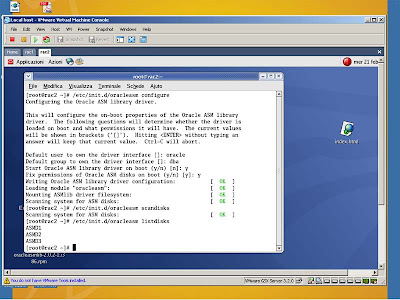

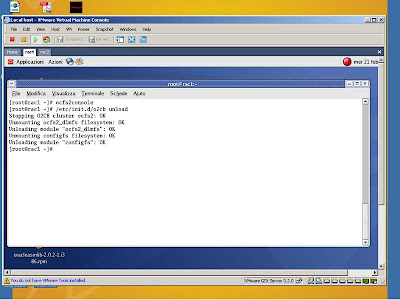

The next step is to configure the o2cb driver (remember the pop-up window we closed earlier?). The driver was loaded at that point; to configure it (on both nodes) we first have to unload it, so as the root user type:

/etc/init.d/o2cb unload

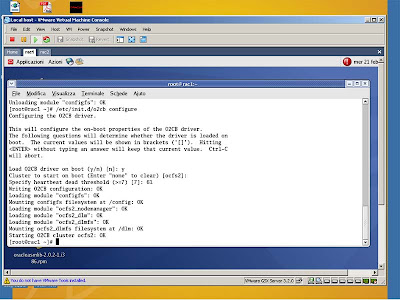

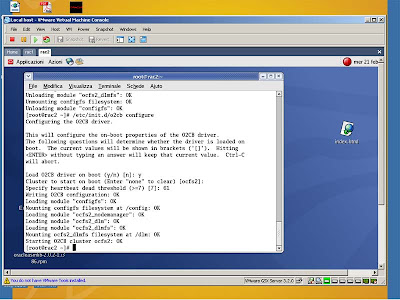

and then

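/etc/init.d/o2cb configure

Type y (load the O2CB driver on boot), press Enter (accept the default cluster to start on boot), then type 61 as the heartbeat dead threshold. The fence time will be (heartbeat dead threshold - 1) * 2 = (61 - 1) * 2 = 120 seconds.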
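The arithmetic behind the value 61 can be sketched in shell as follows. fence_time and threshold_for are hypothetical helper names, and the inverse assumes an even number of seconds.

```shell
#!/bin/sh
# Sketch: fence time in seconds = (heartbeat dead threshold - 1) * 2
fence_time() {
    echo $(( ($1 - 1) * 2 ))
}

# Inverse: threshold needed for a desired (even) fence time in seconds
threshold_for() {
    echo $(( $1 / 2 + 1 ))
}

fence_time 61      # prints 120
threshold_for 120  # prints 61
```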

Perform the same steps on the second node!

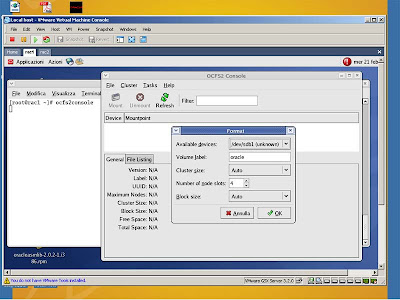

Now, from the first node only, format the file system: run ocfs2console again from a root terminal and select Task->Format... from the menu, as shown in the picture.

Select OK and then confirm the format by clicking the Yes button. Perform these actions from one node only!

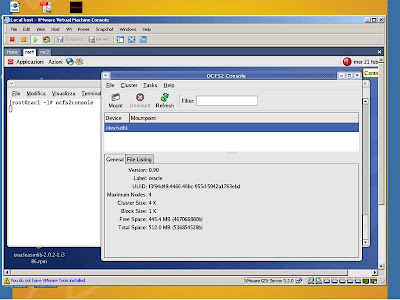

You should now see something like the following picture.

Now, on both nodes, create the mount point, mount the file system and then configure it to be mounted at boot. First type:

mkdir /ocfs

mount -t ocfs2 -o datavolume,nointr /dev/sdb1 /ocfs
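To confirm on each node that the mount succeeded, here is a minimal sketch that looks for the mount point in /proc/mounts. is_ocfs2_mounted is a hypothetical helper; its optional second argument (another file in /proc/mounts format) exists only to make it easy to test.

```shell
#!/bin/sh
# Sketch: succeed if the given mount point is mounted with file system type ocfs2.
# Field 2 of /proc/mounts is the mount point, field 3 the file system type.
is_ocfs2_mounted() {
    mnt=$1
    mounts=${2:-/proc/mounts}
    awk -v m="$mnt" '$2 == m && $3 == "ocfs2" { found = 1 } END { exit !found }' "$mounts"
}

# Usage on each node after the mount command:
# is_ocfs2_mounted /ocfs && echo "/ocfs is mounted as ocfs2"
```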

Then, again on both nodes, add the following line to the /etc/fstab file:

/dev/sdb1 /ocfs ocfs2 _netdev,datavolume,nointr 0 0
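If you script this step, a sketch like the following appends the line only when it is not already present, so running it twice on a node does not duplicate the entry. add_line_once is a hypothetical helper; it matches the whole line exactly, so an existing entry written with tabs instead of spaces would not be detected. The _netdev option in the line itself makes the boot scripts wait for the network before mounting, which the OCFS2 cluster stack needs.

```shell
#!/bin/sh
# Sketch: append a line to a file unless that exact line already exists.
# grep -x matches whole lines, -F treats the pattern as a fixed string.
add_line_once() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# As root on each node:
# add_line_once /etc/fstab '/dev/sdb1 /ocfs ocfs2 _netdev,datavolume,nointr 0 0'
```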

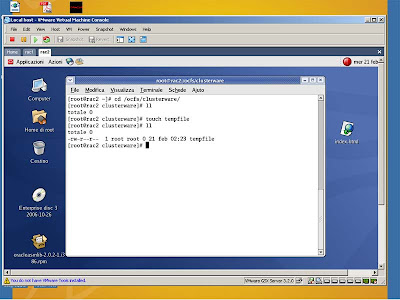

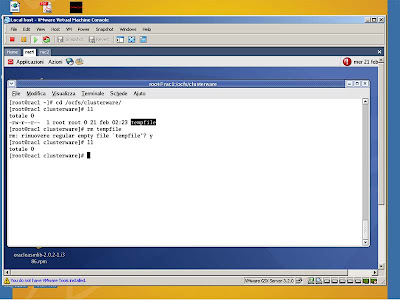

From the first node only, I create the directory for the OCR and Voting Disk files with the following commands:

mkdir /ocfs/clusterware

chown -R oracle:dba /ocfs

Now you should be able to read and write into that directory from both nodes.
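One quick way to test this on each node is to write, read back and delete a small marker file as the oracle user. check_rw is a hypothetical helper and only a sketch, not a complete permission check.

```shell
#!/bin/sh
# Sketch: write a marker file into a directory, read it back, then remove it.
# Run it as the oracle user on each node, for example: check_rw /ocfs/clusterware
check_rw() {
    dir=$1
    marker="$dir/.rw_test.$(uname -n).$$"   # node name + PID keeps the name unique
    echo "written by $(uname -n)" > "$marker" || return 1
    if ! grep -q "written by" "$marker"; then
        rm -f "$marker"
        return 1
    fi
    rm -f "$marker"
    echo "read/write OK: $dir"
}
```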